When rendering a translucent surface, using Depth Fade (the difference between the surface pixel depth and the depth of the scene pixel behind it) to control opacity is a common way of simulating the transmission of light through a translucent body. It is frequently used for water rendering.

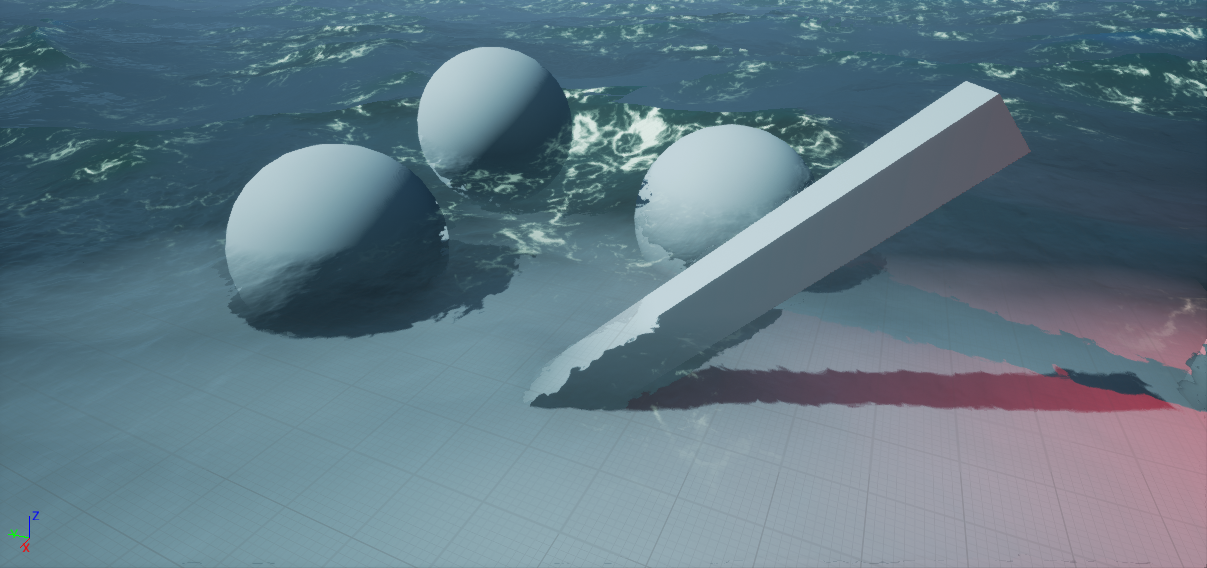
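To make the idea concrete, here is a minimal sketch of the depth fade arithmetic in plain Python rather than shader code. The function name and parameters are my own for illustration, and I am assuming the usual form where the depth difference is divided by a fade distance and clamped to 0..1:

```python
def depth_fade(scene_depth, pixel_depth, fade_distance, opacity=1.0):
    """Illustrative depth fade: 0 where the surface touches the scene,
    rising to `opacity` once the scene is `fade_distance` behind the surface."""
    t = (scene_depth - pixel_depth) / fade_distance
    t = min(max(t, 0.0), 1.0)  # saturate to the 0..1 range
    return opacity * t


# Water pixel at depth 100, sea floor behind it at depth 150, fade over 100 units.
print(depth_fade(150.0, 100.0, 100.0))
```

Shallow water (small depth difference) ends up nearly transparent, deep water ends up at full opacity.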

Unfortunately, when Depth Fade is used together with Screen-Space Distortion (also known as refraction), it produces visual artifacts:

https://image.prntscr.com/image/vvC0b0IlQoKe0bYEBjtKTA.png

The artifacts appear because depth fade is calculated without taking distortion into account, so you see both the distorted (marked in green) and the undistorted (marked in red) surfaces:

https://image.prntscr.com/image/TiZmKCPYS2qzGMT9Us-D0Q.png

I doubt anyone would argue that the artifacts visible in the picture are acceptable.

There are several workarounds for the issue (for water in particular). One of them is to leave water opacity at a fairly low value and calculate fog for every underwater pixel, accounting for the distance the light has traveled underwater. In UE4, that would require calculating underwater fog in an additional post-process step that has to run before translucency. It would be quite expensive too, since you would need to calculate the intersection of the view vector with the water surface for each pixel. Additionally, you would need to duplicate the calculations in all translucent materials that are rendered after that post-process pass.

Instead, I suggest adjusting the way depth fade is calculated.

We need the scene depth used to calculate depth fade to match the distorted scene depth.

Distortion in UE4 is calculated by accumulating distortion from every refractive object, and we do not have access to the results of this calculation that early in the pipeline.

But we can duplicate the distortion calculation of a single object in the material, in exactly the same way as it is calculated in the distortion pass. That should leave virtually no visual artifacts when the water surface is the only refractive object with depth fade in the scene. It may sound restrictive, but cases where you have two highly dynamic refractive surfaces overlaid on top of each other are quite rare. Even when that happens, in most cases the visual impact is acceptable.

Anyway, the core idea is:

- In the translucent surface material, calculate the distortion offsets the same way they would be calculated in the distortion pass.

- Sample scene depth using screen coordinates plus the distortion offset.

- Perform depth fade using the scene depth sampled at the distorted coordinates.

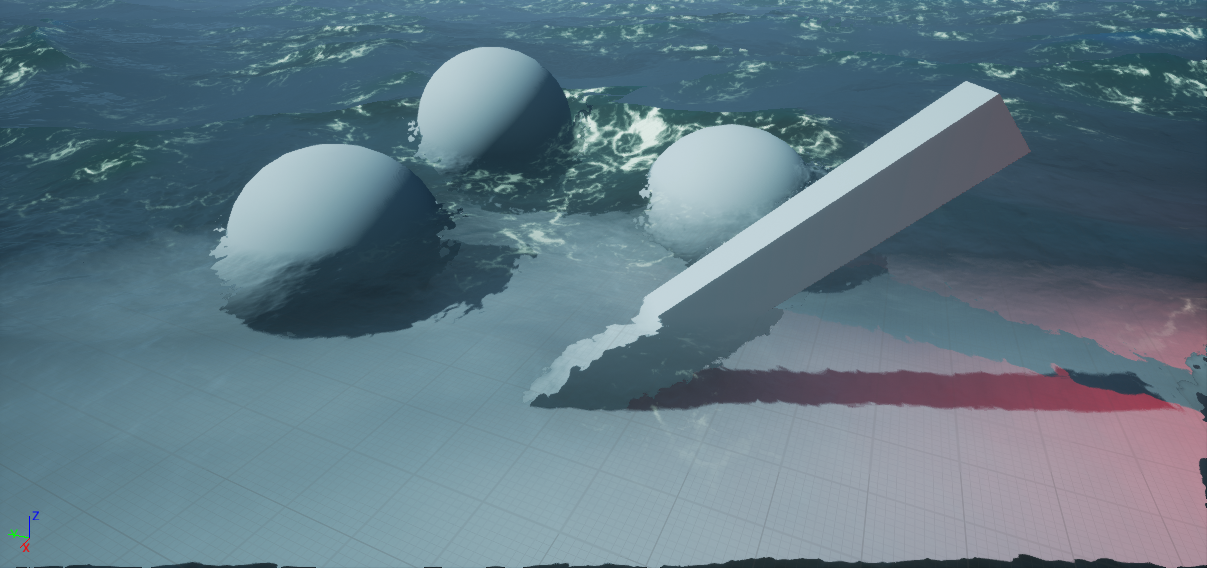
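The three steps above can be sketched roughly like this. It is plain Python, not the actual custom node code, and every name in it is hypothetical. In particular, `distortion_offset` is only a placeholder formula: the real material has to reproduce whatever the engine's distortion pass does, which I am not reproducing here. The depth buffer is a small 2D list sampled with nearest-texel lookup.

```python
def saturate(x):
    return min(max(x, 0.0), 1.0)


def sample_depth(depth_buffer, uv):
    """Nearest-texel lookup with uv in 0..1, clamped at the buffer edges."""
    height, width = len(depth_buffer), len(depth_buffer[0])
    x = min(max(int(uv[0] * width), 0), width - 1)
    y = min(max(int(uv[1] * height), 0), height - 1)
    return depth_buffer[y][x]


def distortion_offset(view_normal_xy, refraction, strength=0.05):
    # ASSUMPTION: placeholder formula for illustration only,
    # not the engine's distortion pass math.
    amount = (refraction - 1.0) * strength
    return (view_normal_xy[0] * amount, view_normal_xy[1] * amount)


def distortion_aware_depth_fade(depth_buffer, uv, pixel_depth,
                                view_normal_xy, refraction,
                                fade_distance, opacity=1.0):
    # Step 1: compute the same offset the distortion pass would apply.
    dx, dy = distortion_offset(view_normal_xy, refraction)
    # Step 2: sample scene depth at the distorted screen coordinates.
    distorted_uv = (uv[0] + dx, uv[1] + dy)
    distorted_depth = sample_depth(depth_buffer, distorted_uv)
    # Step 3: ordinary depth fade, but fed with the distorted depth.
    fade = opacity * saturate((distorted_depth - pixel_depth) / fade_distance)
    return distorted_uv[0], distorted_uv[1], distorted_depth, fade


# One row, two texels: a nearby rock (depth 200) next to a far sea floor (depth 1000).
scene = [[200.0, 1000.0]]
print(distortion_aware_depth_fade(scene, (0.45, 0.5), 100.0, (1.0, 0.0), 3.0, 200.0))
```

With no refraction the pixel fades against the rock directly behind it. With refraction the lookup shifts to the texel the distortion will actually show, so the fade matches what ends up on screen.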

After doing so, this is what we should get:

https://image.prntscr.com/image/s5djkOufT7SAmCqqzwmUWQ.png

And here is an example of how to implement it:

You can grab the custom node code here. (You must have a linked GitHub account.)

Just copy/paste the whole file contents into a custom material expression.

Custom node inputs:

- Refraction - Plug in the same node network as the one connected to the material's Refraction input pin.

- Normal - Plug in the same node network as the one connected to the material's Normal input pin.

- Opacity - Same as the Opacity input of the Depth Fade material function.

- DepthFadeDistance - Same as the FadeDistance input of the Depth Fade material function.

Custom node outputs:

- R - Distorted Screen Space coordinates, x

- G - Distorted Screen Space coordinates, y

- B - Distorted Scene Depth

- A - Distortion-aware depth fade (same as the Depth Fade material function output)

Example Material Graph:

https://image.prntscr.com/image/w5tQ_TegShuPRZqMvmnCFg.png

In the custom node code there is a USE_MIRRORED_WRAP_EDGES define that you can change to toggle the edge mirroring code kindly provided by Kalle_H. It is enabled by default.
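For anyone wondering what edge mirroring means here: when the distorted coordinates land outside the screen, they can be reflected back inside instead of being clamped. The snippet below is not Kalle_H's code, just one common way to mirror a coordinate, written in plain Python with a made-up function name:

```python
def mirror_uv(u):
    """Reflect a screen coordinate back into 0..1.
    For example, 1.1 maps to about 0.9 and -0.2 maps to 0.2."""
    u = abs(u) % 2.0          # the mirrored pattern repeats every 2 units
    return 2.0 - u if u > 1.0 else u


for u in (0.25, -0.2, 1.1):
    print(u, mirror_uv(u))
```

Compared to clamping, mirroring avoids stretching the edge texel across the area where the lookup falls off screen.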

Old irrelevant WIP post under the spoiler:

[SPOILER]

I’ve run into a bit of a complication with screen-space distortion (aka refraction) when it is used together with depth fade.

https://image.prntscr.com/image/31_pY29MTGqdOZCVTf89Dw.png

Well, that is pretty much expected, and common sense tells me that I should switch to depth fading using distorted scene depth.

My problem is that I can’t match the distortion, even though the math looks to be exactly the same as in the distortion shader.

This is the closest I could get:

https://image.prntscr.com/image/TOplEoUQShaW86Bq2Nci5Q.png

It is only loosely close: the distorted depth fade seems to roughly follow the distortion, but it is nowhere near the perfect match I’d expect, and I am somewhat lost searching for the cause of the discrepancy.

I would appreciate any assistance.

[/SPOILER]